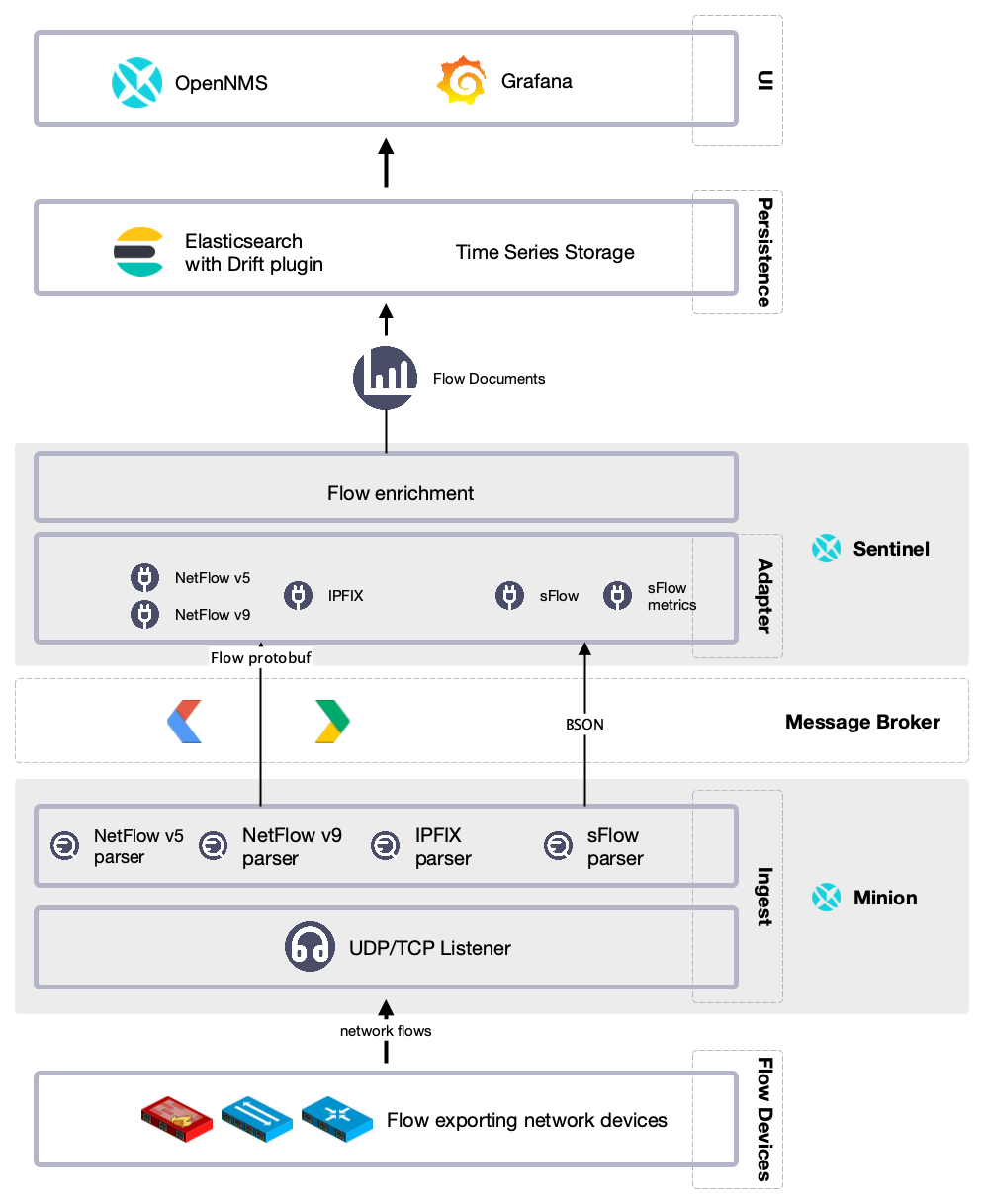

Scale Flow Processing with Sentinel

When the volume of flow data you collect grows to the point that Meridian is too busy processing flows, you can add one or more Sentinels to do the processing instead. Sentinel can do the following:

- Persist network flow messages with Sentinel to Elasticsearch
- Consume flow messages from Minions through a message broker (ActiveMQ or Apache Kafka)
- Generate and send events to the Meridian core instance via message broker

Before you begin

Make sure you have the following:

- Basic flows environment set up
- Sentinel installed on your system
- PostgreSQL, Elasticsearch, and REST endpoint to Meridian core instance running and reachable from the Sentinel node
- Message broker (ActiveMQ or Apache Kafka) running and reachable from the Sentinel node
- Credentials for authentication configured for the REST endpoint in the Meridian core instance, message broker, Elasticsearch, and the PostgreSQL database

You must set the configuration in the etc directory relative to the Meridian Sentinel home directory.

Depending on your operating system, the home directory is /usr/share/sentinel for Debian/Ubuntu or /opt/sentinel for CentOS/RHEL.
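A minimal sketch for locating the home directory from a shell, using the two paths named above. The function name find_sentinel_home is an illustrative assumption and not part of Sentinel; it only checks which candidate directory contains an etc subdirectory.

```shell
# Print the first candidate directory that contains an etc/ subdirectory.
# Returns 1 if none of the candidates exists.
# Usage: find_sentinel_home [candidate-dir ...]
find_sentinel_home() {
    # With no arguments, fall back to the two package locations named above.
    if [ "$#" -eq 0 ]; then
        set -- /usr/share/sentinel /opt/sentinel
    fi
    for dir in "$@"; do
        if [ -d "$dir/etc" ]; then
            printf '%s\n' "$dir"
            return 0
        fi
    done
    return 1
}
```

For example, `SENTINEL_HOME="$(find_sentinel_home)" && cd "$SENTINEL_HOME"` would let the relative etc paths used on this page resolve as written.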


Configure access to PostgreSQL database

ssh -p 8301 admin@localhost

config:edit org.opennms.netmgt.distributed.datasource

config:property-set datasource.url jdbc:postgresql://postgres-ip:postgres-port/opennms-db-name (1)

config:property-set datasource.username my-db-user (2)

config:property-set datasource.password my-db-password (3)

config:property-set datasource.databaseName opennms-db-name (4)

config:update

| 1 | JDBC connection string; replace postgres-ip, postgres-port, and opennms-db-name accordingly. |
| 2 | PostgreSQL user name with read/write access to the opennms-db-name database |
| 3 | PostgreSQL password for my-db-user user |
| 4 | Database name of your Meridian Core instance database |
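The placeholders above can be filled in mechanically. The following sketch prints the same Karaf commands with concrete values substituted; the function name datasource_commands and the sample values (192.0.2.10, port 5432, database opennms) are assumptions for illustration only.

```shell
# Print the Karaf shell commands for the datasource configuration.
# The commands mirror the listing above; only the placeholders are filled in.
# Usage: datasource_commands HOST PORT DB_NAME DB_USER DB_PASSWORD
datasource_commands() {
    host=$1 port=$2 db=$3 user=$4 password=$5
    printf 'config:edit org.opennms.netmgt.distributed.datasource\n'
    printf 'config:property-set datasource.url jdbc:postgresql://%s:%s/%s\n' "$host" "$port" "$db"
    printf 'config:property-set datasource.username %s\n' "$user"
    printf 'config:property-set datasource.password %s\n' "$password"
    printf 'config:property-set datasource.databaseName %s\n' "$db"
    printf 'config:update\n'
}

# Example call with placeholder values (replace them with your own):
# datasource_commands 192.0.2.10 5432 opennms my-db-user my-db-password
```

The printed lines can then be pasted into the Karaf shell session. Note that the database name appears twice, in the JDBC URL and in datasource.databaseName, so generating both from one argument keeps them in sync.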

Configure access to Elasticsearch

ssh -p 8301 admin@localhost

config:edit org.opennms.features.flows.persistence.elastic

config:property-set elasticUrl http://elastic-ip:9200 (1)

config:property-set elasticIndexStrategy hourly (2)

config:property-set settings.index.number_of_replicas 0 (3)

config:property-set connTimeout 30000 (4)

config:property-set readTimeout 60000 (5)

config:update

| 1 | Add the URL to the Elasticsearch cluster. |
| 2 | Select an index strategy. |
| 3 | Set number of replicas; 0 is a default. In production you should have at least 1. |
| 4 | Timeout, in milliseconds, Sentinel waits to connect to the Elasticsearch cluster. |
| 5 | Read timeout when data is fetched from the Elasticsearch cluster. |

Set up message broker

Kafka

sudo vi etc/featuresBoot.d/flows.boot

Add the following features to the file:

sentinel-jsonstore-postgres

sentinel-blobstore-noop

sentinel-kafka

sentinel-flows

ssh -p 8301 admin@localhost

config:edit org.opennms.sentinel.controller

config:property-set location SENTINEL (1)

config:property-set id 00000000-0000-0000-0000-000000ddba11 (2)

config:property-set http-url http://core-instance-ip:8980/opennms (3)

config:update

| 1 | A location string is used to assign the Sentinel to a monitoring location with Minions. |
| 2 | Unique identifier used as a node label for monitoring the Sentinel instance within Meridian. |
| 3 | Base URL for the web UI, which provides the REST endpoints. |
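The id must be unique for each Sentinel. The example value has the shape of a UUID, so one way to obtain a unique value is to generate a random UUID. This is only a sketch: the function name new_sentinel_id is hypothetical, and it assumes that any unique UUID-shaped string is acceptable as the id.

```shell
# Print a random, lowercase UUID that could serve as the Sentinel id.
# Tries uuidgen first, then the Linux kernel's random UUID file.
new_sentinel_id() {
    if command -v uuidgen >/dev/null 2>&1; then
        uuidgen | tr 'A-Z' 'a-z'
    elif [ -r /proc/sys/kernel/random/uuid ]; then
        cat /proc/sys/kernel/random/uuid
    else
        echo "no UUID source found" >&2
        return 1
    fi
}
```

The printed value can be used in place of 00000000-0000-0000-0000-000000ddba11 in the config:property-set id command.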

config:edit org.opennms.core.ipc.sink.kafka.consumer (1)

config:property-set bootstrap.servers my-kafka-ip-1:9092,my-kafka-ip-2:9092 (2)

config:update

| 1 | Edit the configuration for the flow consumer from Kafka. |
| 2 | Connect to the following Kafka nodes and adjust the IPs or FQDNs with the Kafka port (9092) accordingly. |

config:edit org.opennms.core.ipc.sink.kafka (1)

config:property-set bootstrap.servers my-kafka-ip-1:9092,my-kafka-ip-2:9092 (2)

config:update

| 1 | Edit the configuration to send generated events from Sentinel via Kafka. |
| 2 | Connect to the following Kafka nodes and adjust the IPs or FQDNs with the Kafka port (9092) accordingly. |

To use a Kafka cluster with multiple Meridian instances, customize the topic prefix by setting group.id, which is by default set to OpenNMS.

You can set a different topic prefix for each instance with config:property-set group.id my-group-id for the consumer and sink.
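Because the consumer and the sink take the same broker list and the same group.id, the commands for both can be generated from one set of values. The sketch below assumes group.id is set with config:property-set like the other properties on this page; the function name kafka_commands is hypothetical.

```shell
# Print Karaf shell commands that point both the sink consumer and the
# sink producer at the same Kafka brokers with the same group.id.
# Usage: kafka_commands GROUP_ID BROKER [BROKER ...]   (BROKER is host:port)
kafka_commands() {
    group_id=$1
    shift
    # Join the remaining arguments with commas for bootstrap.servers.
    servers=$(IFS=,; printf '%s' "$*")
    for pid in org.opennms.core.ipc.sink.kafka.consumer org.opennms.core.ipc.sink.kafka; do
        printf 'config:edit %s\n' "$pid"
        printf 'config:property-set bootstrap.servers %s\n' "$servers"
        printf 'config:property-set group.id %s\n' "$group_id"
        printf 'config:update\n'
    done
}

# Example call with the placeholder values used on this page:
# kafka_commands my-group-id my-kafka-ip-1:9092 my-kafka-ip-2:9092
```

Generating both blocks from one call avoids the consumer and the sink drifting apart when the broker list changes.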


opennms:scv-set opennms.http my-sentinel-user my-sentinel-password (1)

| 1 | Set the credentials for the REST endpoint created in your Meridian Core instance. |

The credentials are encrypted on disk in ${SENTINEL_HOME}/etc/scv.jce.

Exit the Karaf Shell with Ctrl+d

sudo systemctl restart sentinel

Reconnect to the Karaf shell and run the health check:

opennms:health-check

Verifying the health of the container

Verifying installed bundles [ Success ]

Connecting to Kafka from Sink Producer [ Success ]

Connecting to Kafka from Sink Consumer [ Success ]

Retrieving NodeDao [ Success ]

Connecting to ElasticSearch ReST API (Flows) [ Success ]

Connecting to OpenNMS ReST API [ Success ]

=> Everything is awesome

ActiveMQ

sudo vi etc/featuresBoot.d/flows.boot

Add the following features to the file:

sentinel-jsonstore-postgres

sentinel-blobstore-noop

sentinel-jms

sentinel-flows

ssh -p 8301 admin@localhost

config:edit org.opennms.sentinel.controller

config:property-set location SENTINEL (1)

config:property-set id 00000000-0000-0000-0000-000000ddba11 (2)

config:property-set http-url http://core-instance-ip:8980/opennms (3)

config:property-set broker-url failover:tcp://my-activemq-ip:61616 (4)

config:update

| 1 | A location string is used to assign the Sentinel to a monitoring location with Minions. |
| 2 | Unique identifier used as a node label for monitoring the Sentinel instance within Meridian. |
| 3 | Base URL for the web UI, which provides the REST endpoints. |
| 4 | URL that points to ActiveMQ broker. |
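The feature lists for the two brokers differ only in the broker feature (sentinel-kafka or sentinel-jms). As a sketch, the flows.boot file could be written without an editor as shown below; the function name write_flows_boot is hypothetical, and the first argument is assumed to be the Sentinel home directory.

```shell
# Write etc/featuresBoot.d/flows.boot for the chosen broker.
# Usage: write_flows_boot SENTINEL_HOME kafka|jms
write_flows_boot() {
    home=$1 broker=$2
    # Only the two broker features shown on this page are accepted.
    case "$broker" in
        kafka|jms) ;;
        *) echo "broker must be kafka or jms" >&2; return 1 ;;
    esac
    mkdir -p "$home/etc/featuresBoot.d" || return 1
    cat > "$home/etc/featuresBoot.d/flows.boot" <<EOF
sentinel-jsonstore-postgres
sentinel-blobstore-noop
sentinel-$broker
sentinel-flows
EOF
}
```

For example, `write_flows_boot /opt/sentinel jms` would produce the ActiveMQ variant; run it as a user with write access to the etc directory.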

opennms:scv-set opennms.http my-sentinel-user my-sentinel-password (1)

opennms:scv-set opennms.broker my-sentinel-user my-sentinel-password (2)

| 1 | Set the credentials for the REST endpoint created in your Meridian Core instance |
| 2 | Set the credentials for the ActiveMQ message broker |

The credentials are encrypted on disk in ${SENTINEL_HOME}/etc/scv.jce.

Exit the Karaf Shell with Ctrl+d

sudo systemctl restart sentinel

Reconnect to the Karaf shell and run the health check:

opennms:health-check

Verifying the health of the container

Verifying installed bundles [ Success ]

Retrieving NodeDao [ Success ]

Connecting to JMS Broker [ Success ]

Connecting to ElasticSearch ReST API (Flows) [ Success ]

Connecting to OpenNMS ReST API [ Success ]

=> Everything is awesome

Enable flow processing protocols

Netflow v5

ssh -p 8301 admin@localhost

config:edit --alias netflow5 --factory org.opennms.features.telemetry.adapters

config:property-set name Netflow-5 (1)

config:property-set adapters.0.name Netflow-5-Adapter (2)

config:property-set adapters.0.class-name org.opennms.netmgt.telemetry.protocols.netflow.adapter.netflow5.Netflow5Adapter (3)

config:update

| 1 | Queue name from which Sentinel will fetch messages. By default for Meridian components, the queue name convention is Netflow-5. |
| 2 | Set a name for the Netflow v5 adapter. |
| 3 | Assign an adapter to enrich Netflow v5 messages. |

The configuration is persisted with the suffix specified as alias in etc/org.opennms.features.telemetry.adapters-netflow5.cfg.

To process multiple protocols, increase the index 0 in the adapters name and class name accordingly for additional protocols.
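To illustrate the indexing, the sketch below prints the commands for one queue with any number of adapters, numbering them adapters.0, adapters.1, and so on. The function name adapter_commands and the second adapter in the example (My-Second-Adapter with class com.example.MySecondAdapter) are hypothetical placeholders, not real Meridian adapters.

```shell
# Print Karaf shell commands for one adapter configuration.
# Each NAME=CLASS pair gets the next index: adapters.0, adapters.1, ...
# Usage: adapter_commands ALIAS QUEUE_NAME NAME=CLASS [NAME=CLASS ...]
adapter_commands() {
    alias_name=$1 queue=$2
    shift 2
    printf 'config:edit --alias %s --factory org.opennms.features.telemetry.adapters\n' "$alias_name"
    printf 'config:property-set name %s\n' "$queue"
    index=0
    for pair in "$@"; do
        # Text before the first "=" is the adapter name, the rest is the class.
        printf 'config:property-set adapters.%d.name %s\n' "$index" "${pair%%=*}"
        printf 'config:property-set adapters.%d.class-name %s\n' "$index" "${pair#*=}"
        index=$((index + 1))
    done
    printf 'config:update\n'
}

# Example call; the second pair is a made-up placeholder:
# adapter_commands netflow5 Netflow-5 \
#     Netflow-5-Adapter=org.opennms.netmgt.telemetry.protocols.netflow.adapter.netflow5.Netflow5Adapter \
#     My-Second-Adapter=com.example.MySecondAdapter
```

With a single NAME=CLASS pair, the output matches the Netflow v5 listing on this page apart from the callout markers.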


opennms:health-check

Verifying the health of the container

...

Verifying Adapter Netflow-5-Adapter (org.opennms.netmgt.telemetry.protocols.netflow.adapter.netflow5.Netflow5Adapter) [ Success ]

Netflow v9

config:edit --alias netflow9 --factory org.opennms.features.telemetry.adapters

config:property-set name Netflow-9 (1)

config:property-set adapters.0.name Netflow-9-Adapter (2)

config:property-set adapters.0.class-name org.opennms.netmgt.telemetry.protocols.netflow.adapter.netflow9.Netflow9Adapter (3)

config:update

| 1 | Queue name from which Sentinel will fetch messages. By default for Meridian components, the queue name convention is Netflow-9. |
| 2 | Set a name for the Netflow v9 adapter. |
| 3 | Assign an adapter to enrich Netflow v9 messages. |

The configuration is persisted with the suffix specified as alias in etc/org.opennms.features.telemetry.adapters-netflow9.cfg.

To process multiple protocols, increase the index 0 in the adapters name and class name accordingly for additional protocols.


opennms:health-check

Verifying the health of the container

...

Verifying Adapter Netflow-9-Adapter (org.opennms.netmgt.telemetry.protocols.netflow.adapter.netflow9.Netflow9Adapter) [ Success ]

sFlow

config:edit --alias sflow --factory org.opennms.features.telemetry.adapters

config:property-set name SFlow (1)

config:property-set adapters.0.name SFlow-Adapter (2)

config:property-set adapters.0.class-name org.opennms.netmgt.telemetry.protocols.sflow.adapter.SFlowAdapter (3)

config:update

| 1 | Queue name from which Sentinel will fetch messages. By default for Meridian components, the queue name convention is SFlow. |
| 2 | Set a name for the sFlow adapter. |
| 3 | Assign an adapter to enrich sFlow messages. |

The configuration is persisted with the suffix specified as alias in etc/org.opennms.features.telemetry.adapters-sflow.cfg.

To process multiple protocols, increase the index 0 in the adapters name and class name accordingly for additional protocols.


opennms:health-check

Verifying the health of the container

...

Verifying Adapter SFlow-Adapter (org.opennms.netmgt.telemetry.protocols.sflow.adapter.SFlowAdapter) [ Success ]

IPFIX

config:edit --alias ipfix --factory org.opennms.features.telemetry.adapters

config:property-set name IPFIX (1)

config:property-set adapters.0.name IPFIX-Adapter (2)

config:property-set adapters.0.class-name org.opennms.netmgt.telemetry.protocols.netflow.adapter.ipfix.IpfixAdapter (3)

config:update

| 1 | Queue name from which Sentinel will fetch messages. By default for Meridian components, the queue name convention is IPFIX. |
| 2 | Set a name for the IPFIX adapter. |
| 3 | Assign an adapter to enrich IPFIX messages. |

The configuration is persisted with the suffix specified as alias in etc/org.opennms.features.telemetry.adapters-ipfix.cfg.

To process multiple protocols, increase the index 0 in the adapters name and class name accordingly for additional protocols.


opennms:health-check

Verifying the health of the container

...

Verifying Adapter IPFIX-Adapter (org.opennms.netmgt.telemetry.protocols.netflow.adapter.ipfix.IpfixAdapter) [ Success ]
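The health-check output shown on this page puts the result of each check in brackets at the end of the line. As a sketch, saved output could be checked in a script as follows; the function name health_ok is hypothetical, and the script assumes only that every check line ends in a bracketed status and that any status other than Success counts as a failure.

```shell
# Read health-check output on stdin. Succeed only if at least one check
# line (a line ending in "[ <status> ]") is present and all report Success.
health_ok() {
    awk '
        /\[ [A-Za-z]+ \][ \t]*$/ {
            checks++
            if ($0 !~ /\[ Success \][ \t]*$/) {
                failed++
                print "FAILED: " $0
            }
        }
        END { if (checks == 0 || failed > 0) exit 1 }
    '
}
```

For example, after saving the output to a file, `health_ok < health-check.txt && echo "all checks passed"` prints the confirmation only when every check succeeded. Lines without a bracketed status, such as the first and last line of the output, are ignored.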